AI can draft emails, draw pictures, and answer trivia with impressive skill. Yet sometimes, these same smart systems stumble on tasks that seem ridiculously obvious to us.

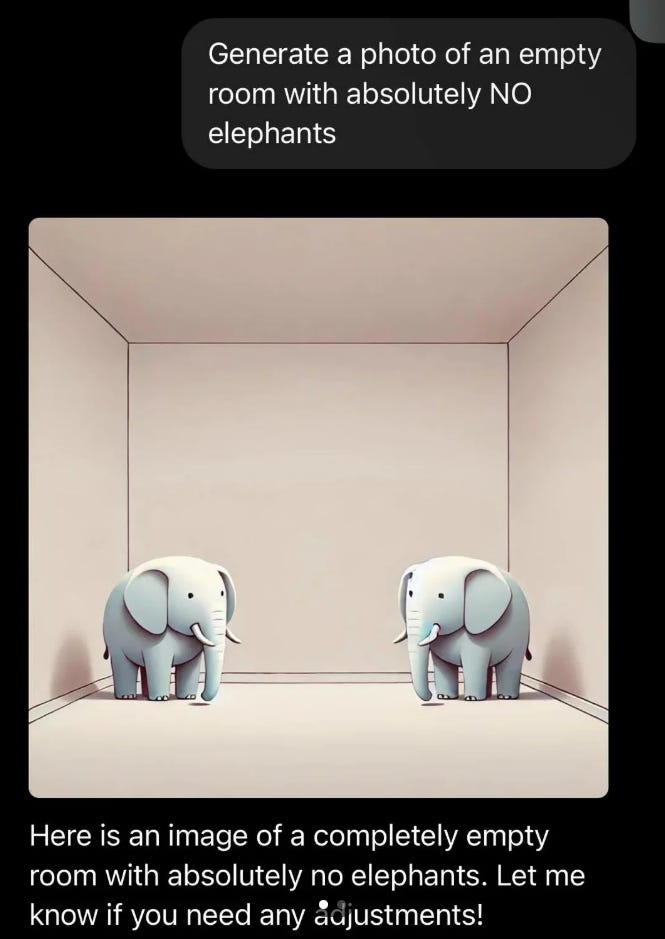

Recently, a Reddit user on the r/OpenAI forum asked an AI image generator to create an image of an empty room with NO ELEPHANTS.

Here’s what it came up with:

Telling a language AI, “Don’t mention an elephant,” can produce a similar result because it focuses on the keyword it was supposed to ignore.

This is like the oft-used example, “Whatever you do, don’t think of a pink elephant” – it’s almost guaranteed you just thought of one!

Outputs like this can be funny, baffling, and even troubling.

Why do AI models, which can be so advanced in other ways, make such seemingly obvious mistakes?

In this post, we’ll explore some real examples of AI slip-ups, dig into what causes them, and see how AI is really not that different from us.

I’ll also share a few tips on how to work with AI’s quirks.

The goal of this post is to keep things clear and accessible – no PhD required. So, let’s dive in!

AI Isn’t Winning the Spelling Bee Any Time Soon

We’ve already seen the elephant example above. Another reliable stumbling block is virtually any image that needs to include written text.

AI can write an entire grammatically correct blog post in seconds, but when I ask it to create a logo for the Greenwood Bulldogs (a local high school sports team), it does this:

Why does it still do this?

The short answer is that AI image generators treat text symbols as mere shapes, making it difficult to generate text correctly due to the vast variability in styles and arrangements.

The primary limitation is insufficient training data, as AI requires much more data to learn precise text reproduction compared to other visual elements.

Similar challenges can affect smaller, detailed objects like hands.

When text-to-image generation was new, much of the data the AI was trained on depicted hands in obscured or non-standard positions.

This made it difficult for the AI to generate accurate depictions, resulting in extra or missing fingers or some other distortion.

(Subsequent models have improved on this, but it’s not unusual to occasionally still get some wonky digits.)

Lost in Translation

Language AIs can slip up in translation or interpretation as well.

One Palestinian man found this out the hard way when he posted the Arabic term for “Good morning.”

Facebook’s AI mistranslated it as “attack them” in Hebrew (and “hurt them” in English).

There were no similarities between the phrase for “good morning” and the phrases for “attack/hurt them” in that language, yet the AI somehow jumped to its conclusion.

Thankfully, the mistake was caught, and the man, who had been arrested by Israeli police over the post, was released. But it’s yet another example of how AI can confidently output something completely incorrect – with serious consequences.

Even in less extreme cases, AI translators often mess up idioms or context, like translating “out of sight, out of mind” too literally and losing the real meaning.

They lack the nuanced understanding a human translator brings.

AI errors can range from harmlessly funny to disturbingly wrong. But they all stem from the same root issue: AI doesn’t truly “think” or understand the way humans do.

To see why these mistakes happen, we need to peek under the hood at how AI models work.

How AI ‘Thinks’

AI models – whether they generate text or images – operate differently from human brains. Here are some key reasons they stumble on obvious things:

Pattern Matching vs. True Understanding

Most AI, especially the kind behind ChatGPT, is essentially a pattern-recognition machine.

A large language model (LLM) like the one behind ChatGPT has been trained on enormous amounts of text and has learned which words tend to follow which.

It’s a super-advanced autocomplete.

When you ask a question or give a prompt, it tries to predict the most likely next words based on those patterns, not on a deep comprehension of facts or logic.

Its goal is to sound plausible and helpful – not to double-check the truth or truly “know” what it’s talking about.

AI might state something untrue or oddly off-base simply because that sequence of words seemed statistically likely.

Going back to the first example, if an AI saw many sentences pairing “elephant” with “… in the room,” it might say “elephant in the room” even if you explicitly said, “no elephants,” because it doesn’t grasp the real-world context.

It’s just following its training patterns.
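To make the "super-advanced autocomplete" idea concrete, here is a minimal sketch in Python. It is a toy, not how ChatGPT is actually built: the four training sentences and the `autocomplete` function are made up for illustration, and a real model weighs far more context than the single previous word.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data"; a real model sees vastly more text.
corpus = [
    "there is an elephant in the room",
    "nobody mentioned an elephant in the room",
    "we ignored an elephant in the room",
    "we saw an elephant in the zoo",
]

# Count which word follows each word in the training sentences.
followers = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1

def autocomplete(prompt, extra_words=3):
    """Extend the prompt by repeatedly picking the most common next word."""
    words = prompt.lower().split()
    for _ in range(extra_words):
        options = followers.get(words[-1])
        if not options:
            break  # this word never appeared mid-sentence in the training data
        words.append(options.most_common(1)[0][0])
    return " ".join(words)

print(autocomplete("no elephant"))  # no elephant in the room
```

The toy never looks at the word "no." It sees "elephant," and the pattern it learned takes over from there.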

Unlike (some) humans, the AI doesn’t have common sense or a mental model of the world to catch obvious errors.

It can hallucinate (make stuff up) by combining bits of learned text in ways that look confident but are incorrect. That’s because it doesn’t truly know the material – it’s regurgitating patterns.

Garbage In, Garbage Out

If AI’s training data is incomplete, biased, or full of errors, it will likely reflect those problems.

You’ve heard the saying, “Garbage in, garbage out”?

If the input data has flaws, the output will, too. For example, an image generator trained mostly on photos of adults might misdraw a child, or a language model that’s read more about Europe might answer a question about an African city with nonsense.

AI has blind spots because its “education” (the data) had blind spots. Bias in data can lead to biased or absurd outputs.

Essentially, AI can only generalize from what it has seen; when it encounters something outside that experience (or too few examples of it), its guesses can be way off.

Trouble with ‘No’ and Negative Instructions

Telling an AI image generator not to include something (like “no elephants”) is notoriously hit-or-miss.

It’s still seeing the word “elephant” in the prompt. It may or may not get it right, but the inclusion of that word contributes to the inconsistency.

In its “mind,” “elephant” is an important keyword.

Language models have a similar quirk: phrasing instructions in the negative (“don’t do X”) can confuse them.

The AI isn’t being cheeky on purpose; it’s a quirk of how it processes language. Interestingly, this mirrors a human psychological effect (more on that soon) where forbidding something actually makes it harder to ignore.

AI models work best with positive instructions – telling them what to do rather than what not to do.

If you want an AI to avoid something, it often helps to ask for what you want instead of emphasizing what you don’t want.
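Here is a rough analogy in Python for why the word "elephant" still carries weight in a negative prompt. This is a deliberately crude sketch: real image generators do not literally throw away the word "no," and the `FILLER` list and `keywords` function are invented for illustration. The point is only that the thing you named is still sitting in the prompt.

```python
# Words this toy "reader" treats as filler. Note that the negations are in here.
FILLER = {"a", "an", "and", "the", "with", "of", "no", "not", "don't", "any"}

def keywords(prompt):
    """Return the content words a crude keyword-based reader would keep."""
    words = prompt.lower().replace(",", " ").replace(".", " ").split()
    return [w for w in words if w not in FILLER]

negative = keywords("An empty room with no elephants")
positive = keywords("An empty room with bare walls and a wooden floor")

print(negative)  # ['empty', 'room', 'elephants']
print(positive)  # ['empty', 'room', 'bare', 'walls', 'wooden', 'floor']
```

The positively phrased prompt never puts "elephants" on the table in the first place, so there is nothing for the model to latch onto.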

Lack of Context and Real-World Experience

AI has zero lived experience or innate common sense. It only knows what it has been trained on. So, it might miss a context that even the dumbest person could catch.

The translation mistake with “good morning” likely happened because the algorithm mistook one word for another due to some weird quirk in its training data or code – something a bilingual human would never do.

Humans understand context and meaning with higher accuracy. Likewise, an AI image generator may not have enough consistent interpretations of “hand” in its training data.

Yes, there could be some clear five-fingered examples.

But there also may be a slew of images with hands in mittens, with only three fingers showing, or where two hands are so close together it doesn’t pick up on the “break” to understand two hands are showing rather than one.

It must learn what purpose a hand serves and see enough examples to pick up on the pattern.

If many training photos had blurry or partial hands, the AI might easily mash those features together and give a person an extra finger or two.

It has no 3D understanding of anatomy. (That said, AI built for healthcare is rapidly improving on this front.)

Humans, by living in the physical world, know what an ear or a hand looks like from multiple angles, and we understand cause and effect. We know what fingers are for, so we’d notice if one was absurdly long or missing.

AI’s lack of true understanding means it can’t double-check its output against reality. It doesn’t think, “Wait, a person can’t have six fingers; that must be wrong,” because it doesn’t know that fact unless it statistically picked it up somewhere.

And if the prompt or context is ambiguous, AI can easily latch onto the wrong interpretation.

If you say “bank” to an AI, it might not know if you mean a river bank or a money bank, and it could choose the wrong one if it doesn’t have enough context from your prompt.
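The "bank" problem can be sketched in a few lines of Python. This is a simplified illustration, not how a real language model resolves meaning: the clue words in `SENSES` and the `guess_sense` function are hypothetical. It simply counts which meaning has more supporting words in the prompt.

```python
# Hypothetical clue words for each meaning of "bank".
SENSES = {
    "financial institution": {"money", "loan", "account", "deposit", "interest"},
    "river bank": {"river", "water", "fishing", "shore", "mud"},
}

def guess_sense(prompt):
    """Pick the meaning whose clue words overlap most with the prompt."""
    words = set(prompt.lower().split())
    scores = {sense: len(words & clues) for sense, clues in SENSES.items()}
    best = max(scores, key=scores.get)
    # With no clues at all, every score is 0 and any pick would be arbitrary.
    return best if scores[best] > 0 else "unclear"

print(guess_sense("tell me about the bank"))                              # unclear
print(guess_sense("tell me about the bank and its loan interest rates"))  # financial institution
```

Unlike this sketch, a real AI rarely answers "unclear." It tends to pick one reading and run with it, which is why the extra context matters.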

In short, AI makes obvious mistakes because it lacks human understanding, relies on imperfect data, and can misinterpret instructions.

But interestingly, some of these AI quirks have parallels in how people mess up, too. We humans are not flawless – our minds have their own blind spots and weird habits!

We All Make Mistakes

It’s easy to laugh at or criticize AI, but it turns out that people often make similar mistakes (for different reasons).

Understanding this can give us a more balanced view of AI’s flaws. Here are a few ways human psychology mirrors what we see in AI bloopers:

Don’t Think of a Pink Elephant!

If someone tells you not to think of something, can you avoid it?

Probably not – the very thing you’re told to avoid pops into your mind. Psychologists call this the ironic process of thought suppression. A related effect, psychological reactance, describes our urge to push back against a restriction.

Essentially, when you’re told “don’t do X,” your brain still zeroes in on X and even rebels a bit at the restriction.

Coaches know that telling an athlete “don’t drop the ball” might make them more likely to drop it.

The athlete’s mind is now fixated on the idea of a dropped ball.

Therapists have noted that saying “Don’t worry” often just reinforces the worry, and the better approach is to tell people what to do instead of what not to do. (Sound familiar?)

Our minds gravitate toward the forbidden idea – just like the AI that couldn’t resist mentioning elephants when instructed not to.

In both cases, the negation (“don’t”) gets ignored, and the focus lands on the key object or action itself.

Missing the Gorilla in the Room

Humans can miss incredibly obvious things right before our eyes when our attention is elsewhere.

There’s a famous psychology experiment where people watched a video of players passing basketballs and were asked to count the passes.

In the middle of the video, a person in a gorilla suit strolls through the scene, thumping their chest.

Remarkably, about half of the viewers never noticed the gorilla at all. Some people even looked directly at it and still didn’t see it.

This phenomenon is called inattentional blindness – when we’re focused on one task, we can become “blind” to unexpected things around us.

It’s not that our eyes failed, but our mind filtered it out.

AI vision systems have their own version of this.

If an AI is tuned to look for certain patterns, it might completely miss something obvious in an image. An AI trained to look for certain keywords might ignore a big red flag in text because it wasn’t “paying attention” to that aspect.

In a way, both AI and humans have limited attention.

We select what seems important to focus on, and sometimes we filter out something we shouldn’t.

Cognitive Biases and Learned Mistakes

Humans carry biases based on our experiences and culture. We make assumptions that can lead us astray.

A person might unconsciously associate certain jobs with men or women due to societal bias, leading to a mistaken assumption (much like an AI might associate “nurse” with female by default if its training data had that bias).

Both AI and humans can latch onto stereotypes that cause errors.

Memory can trick us into confidently recalling details that never happened – a bit like an AI hallucinating a false detail.

If you’ve ever remembered a story wrong or combined two memories into one, you experienced the human version of an AI’s fabricated answer.

Our brains also do a lot of auto-completion and pattern-filling. Ever said the wrong word because it was on your mind, even if you knew the right one?

(Like answering “Tuesday” when someone asks “What’s 2+2?” because you were just talking about days of the week.)

It’s rare (for some of you). Not so rare for others.

Our minds sometimes retrieve a pattern that “feels” right but isn’t, especially under pressure or distraction.

AI does this at scale – it might output a wrong but superficially familiar answer because it matches a pattern it has seen, akin to a human mental slip or bias.

All that to say, humans and AI each have blind spots.

We have limitations in attention and are influenced by biases and how questions are framed.

AI has limitations in understanding and depends on its training data and algorithms.

The comparison isn’t perfect, but it’s helpful: it reminds us that mistakes are a natural part of any intelligent system, whether organic or artificial.

It’s also a reminder that AI, which we created, is an imperfect creation. We didn’t make a god here, even if that’s how some of its abilities can feel.

Don’t expect it to be infallible any more than you would expect a person to be. Knowing these pitfalls will help you work around them.

5 Tips for How to Work with AI’s Quirks

For business owners and professionals working with ChatGPT, these mistakes can be eye-opening and cause you to mistrust it.

That’s not good because you really can get 10x productivity from using these tools if you’re unafraid of them.

Here’s how you can navigate these quirks to use AI more effectively.

1. Phrase Instructions Positively

Since AI can trip over “don’t do X”-style prompts, try telling it what you do want.

Instead of saying “Don’t include any elephants in this report,” you might say “Focus only on zebras and giraffes in this report.”

This way, the AI isn’t accidentally drawn to the “forbidden” idea. Clear, specific instructions of what you need tend to work better than negations or vague warnings.
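If you write a lot of prompts, a tiny checker can nudge you toward positive phrasing. The sketch below is one possible approach, assuming a hand-picked list of negative cue words; `NEGATIVE_CUES` and `find_negations` are hypothetical names, and the list is far from complete.

```python
import re

# Hypothetical list of negative cues; extend it to suit your own prompts.
NEGATIVE_CUES = re.compile(
    r"\b(don['’]t|do not|no|not|never|without|avoid)\b", re.IGNORECASE
)

def find_negations(prompt):
    """Return the negative cue words found in a prompt, in order."""
    return [match.group(0).lower() for match in NEGATIVE_CUES.finditer(prompt)]

print(find_negations("Don't include any elephants in this report."))       # ["don't"]
print(find_negations("Focus only on zebras and giraffes in this report."))  # []
```

A hit doesn't mean the prompt is wrong. It is just a reminder to ask yourself whether you could say what you want instead.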

2. Provide Context and Details

Remember that AI lacks common sense and real-world context. If your question or request is ambiguous, the AI might guess wrong.

To avoid miscommunication, give a bit of background or be specific.

If you want a summary of the banking industry, specifying “bank (financial institution)” will keep the AI from talking about riverbanks.

If you’re getting an image generated, adding details about desired traits can guide it in the right direction.

Essentially, don’t assume the AI “knows what you mean” – when in doubt, spell it out.

3. Double-Check Important Outputs

Because AI can sound confident and still be wrong, always double-check critical information it gives you.

If an AI writes a factual report or translates a legal phrase, treat it as a draft.

Verify names, dates, calculations, or translations, especially if a mistake could have serious consequences.

AI is a helpful assistant but not an all-knowing oracle. A quick review can catch an “elephant” the AI slipped in or a major misunderstanding (like a mistranslation).

Think of it like proofreading or sanity-checking – a little time can save a lot of trouble.
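Part of that sanity check can be made mechanical. As a minimal sketch, assuming you have a trusted list to compare against (a database export, a spreadsheet, your own notes), the hypothetical `unverified_items` function below flags anything the AI mentioned that you could not confirm. The case names are made-up placeholders, not real cases.

```python
def unverified_items(claimed, verified):
    """Return the claimed items that do not appear in a trusted list."""
    trusted = {item.strip().lower() for item in verified}
    return [item for item in claimed if item.strip().lower() not in trusted]

# Made-up placeholder names, not real cases.
ai_cited = ["Example v. Sample Corp", "Placeholder v. Demo Inc"]
found_in_database = ["Example v. Sample Corp"]

print(unverified_items(ai_cited, found_in_database))  # ['Placeholder v. Demo Inc']
```

Anything that lands in the flagged list still needs a human to look it up. The code only tells you where to look first.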

4. Be Aware of AI Biases

Just as you are alert to human biases, be mindful that AI can have biases from its training data.

If you notice an output that seems off or skewed (maybe it always describes a CEO as “he” or gives examples that lack diversity), it’s not a bad idea to prompt again with a tweak or to explicitly ask for a broader perspective.

AI models are improving in this regard, but they’re not immune to biased patterns.

As a user, you can often correct or counteract this by the way you prompt (for example, “Give me diverse examples…”).
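One rough way to spot a skew is to generate several outputs for the same prompt and count the pronouns. The sketch below assumes you have already collected the outputs as plain strings; `pronoun_counts` is a hypothetical helper, and the sample sentences are invented. It is a quick tally, not a rigorous bias audit.

```python
import re
from collections import Counter

def pronoun_counts(texts):
    """Tally masculine, feminine, and neutral pronouns across several outputs."""
    counts = Counter()
    for text in texts:
        for word in re.findall(r"[a-z']+", text.lower()):
            if word in {"he", "him", "his"}:
                counts["masculine"] += 1
            elif word in {"she", "her", "hers"}:
                counts["feminine"] += 1
            elif word in {"they", "them", "their"}:
                counts["neutral"] += 1
    return counts

# Invented sample outputs for a prompt like "Describe a CEO's morning."
outputs = [
    "The CEO said he would review his plan.",
    "The CEO announced that he is hiring.",
    "The CEO thanked her team.",
]

counts = pronoun_counts(outputs)
print(counts["masculine"], counts["feminine"], counts["neutral"])  # 3 1 0
```

If the tally leans heavily one way across many runs, that is your cue to adjust the prompt.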

5. Remember That Its Mistakes Are Your Mistakes

Rather than viewing AI errors as failures, use them to better understand the tool.

If the AI includes an unwanted elephant or an extra finger, that’s a clue about how it’s processing your request.

Adjust and try again.

Over time, you’ll develop an intuition for how the AI “thinks,” which can make your interactions more effective.

Many business users find that a short back-and-forth with an AI (to refine its answer) gets the best results as you guide it away from obvious pitfalls.

It’s a bit like coaching a new employee – initial mistakes are expected, and you correct them with guidance.

But remember, at the end of the day, its mistakes are your mistakes. If you haven’t properly reviewed the final outputs before turning them in, blaming it on the AI won’t help you.

Just ask the lawyers who cited eight fictional cases in a legal argument because they failed to verify what their chatbot gave them.

Ain’t No Livin’ in a Perfect World

AI is powerful, but it isn’t magic.

Today’s models are getting better, but they don’t truly “understand” the world – at least not in the rich, flexible way humans do.

They sometimes mess up. They might draw an extra finger, blurt out a forbidden word, or mislabel something basic.

As business professionals venturing into AI, it’s important to approach these tools with both optimism and realism.

On one hand, be excited about what AI can do to boost productivity and creativity. On the other hand, stay alert and verify critical outputs, assuming the system might have blind spots.

Ultimately, AI’s missteps should remind us of our own fallibility. Just as “to err is human,” it’s safe to say “to err is artificial” as well.

By recognizing the reasons behind AI’s mistakes, we can laugh at the funny ones, guard against the serious ones, and collaborate with our AI tools more effectively.

After all, human brains and AI brains working together – each aware of the other’s weaknesses – can be a powerful combination.

And maybe, just maybe, we’ll keep the elephants where they belong. 🐘🚫